
Prometheus integration

Introduced in GitLab 9.0.

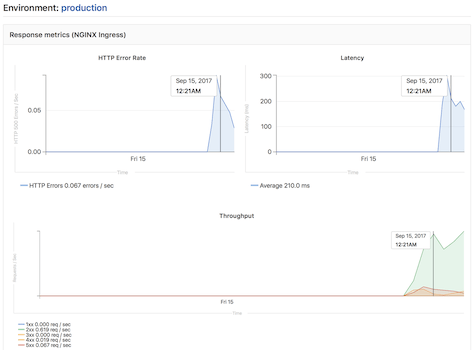

GitLab integrates with Prometheus to monitor key metrics of your apps directly within GitLab. Metrics for each environment are retrieved from Prometheus and then displayed in the GitLab interface.

There are two ways to set up Prometheus integration, depending on where your apps are running:

- For deployments on Kubernetes, GitLab can automatically deploy and manage Prometheus.

- For other deployment targets, specify the URL of your Prometheus server.

Once enabled, GitLab will automatically detect metrics from known services in the metric library. You can also add your own metrics.

Enabling Prometheus Integration

Managed Prometheus on Kubernetes

Introduced in GitLab 10.5.

GitLab can seamlessly deploy and manage Prometheus on a connected Kubernetes cluster, making monitoring of your apps easy.

Requirements

- A connected Kubernetes cluster

- Helm Tiller installed by GitLab

Getting started

Once you have a connected Kubernetes cluster with Helm installed, deploying a managed Prometheus is as easy as a single click.

- Go to the Operations > Kubernetes page to view your connected clusters

- Select the cluster you would like to deploy Prometheus to

- Click the Install button to deploy Prometheus to the cluster

About managed Prometheus deployments

Prometheus is deployed into the gitlab-managed-apps namespace, using the official Helm chart. Prometheus is only accessible within the cluster, with GitLab communicating through the Kubernetes API.

The Prometheus server will automatically detect and monitor nodes, pods, and endpoints. To configure a resource to be monitored by Prometheus, simply set the following Kubernetes annotations:

- `prometheus.io/scrape` to `true` to enable monitoring of the resource.

- `prometheus.io/port` to define the port of the metrics endpoint.

- `prometheus.io/path` to define the path of the metrics endpoint. Defaults to `/metrics`.
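As an illustration, the following Python sketch builds the `metadata.annotations` block for a resource that exposes metrics on a given port. The helper name `scrape_annotations`, the resource name, and port 8080 are hypothetical; only the three annotation keys come from the list above. Kubernetes requires annotation values to be strings, so the port is converted with `str()`.

```python
import json


def scrape_annotations(port: int, path: str = "/metrics") -> dict[str, str]:
    """Return annotations asking Prometheus to scrape a resource.

    Hypothetical helper. Kubernetes annotation values must be strings,
    so the port number is converted before it is stored.
    """
    return {
        "prometheus.io/scrape": "true",   # enable monitoring of the resource
        "prometheus.io/port": str(port),  # port of the metrics endpoint
        "prometheus.io/path": path,       # path of the metrics endpoint
    }


# Hypothetical metadata for an app serving metrics on port 8080.
metadata = {"name": "my-app", "annotations": scrape_annotations(8080)}
print(json.dumps(metadata, indent=2))
```

The printed JSON can be copied into the `metadata` section of a pod or service manifest, since JSON is a subset of YAML.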

CPU and memory consumption are monitored, but resources must follow naming conventions for GitLab to determine their environment. If you are using Auto DevOps, this is handled automatically.

The NGINX Ingress that GitLab deploys to clusters is automatically annotated for monitoring, providing key response metrics: latency, throughput, and error rates.
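To make "throughput" and "error rate" concrete, the sketch below derives both from two snapshots of cumulative request counters, which is roughly what PromQL's `rate()` does over a window (ignoring counter resets and extrapolation). The `CounterSnapshot` structure and its field names are hypothetical and are not the actual NGINX Ingress metric names.

```python
from dataclasses import dataclass


@dataclass
class CounterSnapshot:
    """Hypothetical cumulative request counters read at one instant."""
    timestamp: float  # seconds
    total: int        # all requests served so far
    errors_5xx: int   # requests answered with a 5xx status so far


def throughput_and_error_rate(a: CounterSnapshot, b: CounterSnapshot) -> tuple[float, float]:
    """Return (requests per second, fraction of 5xx responses) between a and b.

    Simplified: assumes the counters did not reset between the snapshots.
    """
    elapsed = b.timestamp - a.timestamp
    if elapsed <= 0:
        raise ValueError("snapshots must be in chronological order")
    requests = b.total - a.total
    errors = b.errors_5xx - a.errors_5xx
    rps = requests / elapsed
    error_rate = errors / requests if requests else 0.0
    return rps, error_rate


before = CounterSnapshot(timestamp=0, total=10_000, errors_5xx=20)
after = CounterSnapshot(timestamp=60, total=13_000, errors_5xx=50)
print(throughput_and_error_rate(before, after))  # (50.0, 0.01)
```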

Manual configuration of Prometheus

Requirements

Integration with Prometheus requires the following:

- GitLab 9.0 or higher

- Prometheus must be configured to collect one of the supported metrics

- Each metric must have a label to indicate the environment

- GitLab must have network connectivity to the Prometheus server

Getting started

Installing and configuring Prometheus to monitor applications is fairly straightforward.

- Install Prometheus

- Set up one of the supported monitoring targets

- Configure the Prometheus server to collect their metrics
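A minimal sketch of the last step, assuming an app that exposes metrics at `app.example.com:8080` in a `production` environment. The job name, target, and the `environment` label name are placeholders; the point is that every scraped series carries a label identifying its environment, as the requirements above demand. The dictionary mirrors the `scrape_configs` / `static_configs` structure of `prometheus.yml`, and because JSON is a subset of YAML the printed output should be usable there.

```python
import json


def scrape_config(job: str, target: str, environment: str) -> dict:
    """Build one Prometheus scrape job whose series carry an environment label.

    All names are placeholders; adapt them to your own deployment.
    """
    return {
        "job_name": job,
        "static_configs": [
            {
                "targets": [target],
                # Attached to every series scraped from this target.
                "labels": {"environment": environment},
            }
        ],
    }


config = {"scrape_configs": [scrape_config("my-app", "app.example.com:8080", "production")]}
print(json.dumps(config, indent=2))
```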

Configuration in GitLab

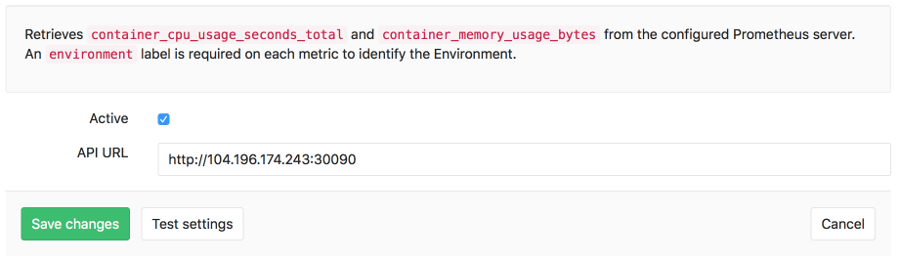

Configuring the Prometheus integration within GitLab requires only the DNS name or IP address of the Prometheus server you'd like to integrate with.

- Navigate to the Integrations page

- Click the Prometheus service

- Provide the base URL of your server, for example `http://prometheus.example.com/`. The Test Settings button can be used to confirm connectivity from GitLab to the Prometheus server.
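To check connectivity yourself, you can call the Prometheus HTTP API's `/api/v1/query` endpoint with a trivial query such as `up`. The sketch below only builds the request URL (the base URL is the placeholder from above) and takes care to keep any path prefix in the base URL. It is not how the Test Settings button is implemented.

```python
from urllib.parse import urlencode, urljoin


def query_url(base_url: str, promql: str) -> str:
    """Return the Prometheus HTTP API URL for an instant query.

    A trailing slash is enforced so that urljoin keeps any path prefix,
    e.g. http://host/prometheus/api/v1/query instead of dropping "prometheus".
    """
    base = base_url.rstrip("/") + "/"
    return urljoin(base, "api/v1/query") + "?" + urlencode({"query": promql})


print(query_url("http://prometheus.example.com/", "up"))
# http://prometheus.example.com/api/v1/query?query=up
```

Opening the resulting URL with `urllib.request.urlopen` from a machine with network access to the server should return JSON with `"status": "success"` if Prometheus is reachable.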

Monitoring CI/CD Environments

Once configured, GitLab will attempt to retrieve performance metrics for any environment which has had a successful deployment.

GitLab will automatically scan the Prometheus server for metrics from known services like Kubernetes and NGINX, and attempt to identify individual environments. The supported metrics and scan process are detailed in our Prometheus Metrics Library documentation.

You can view the performance dashboard for an environment by clicking on the monitoring button.

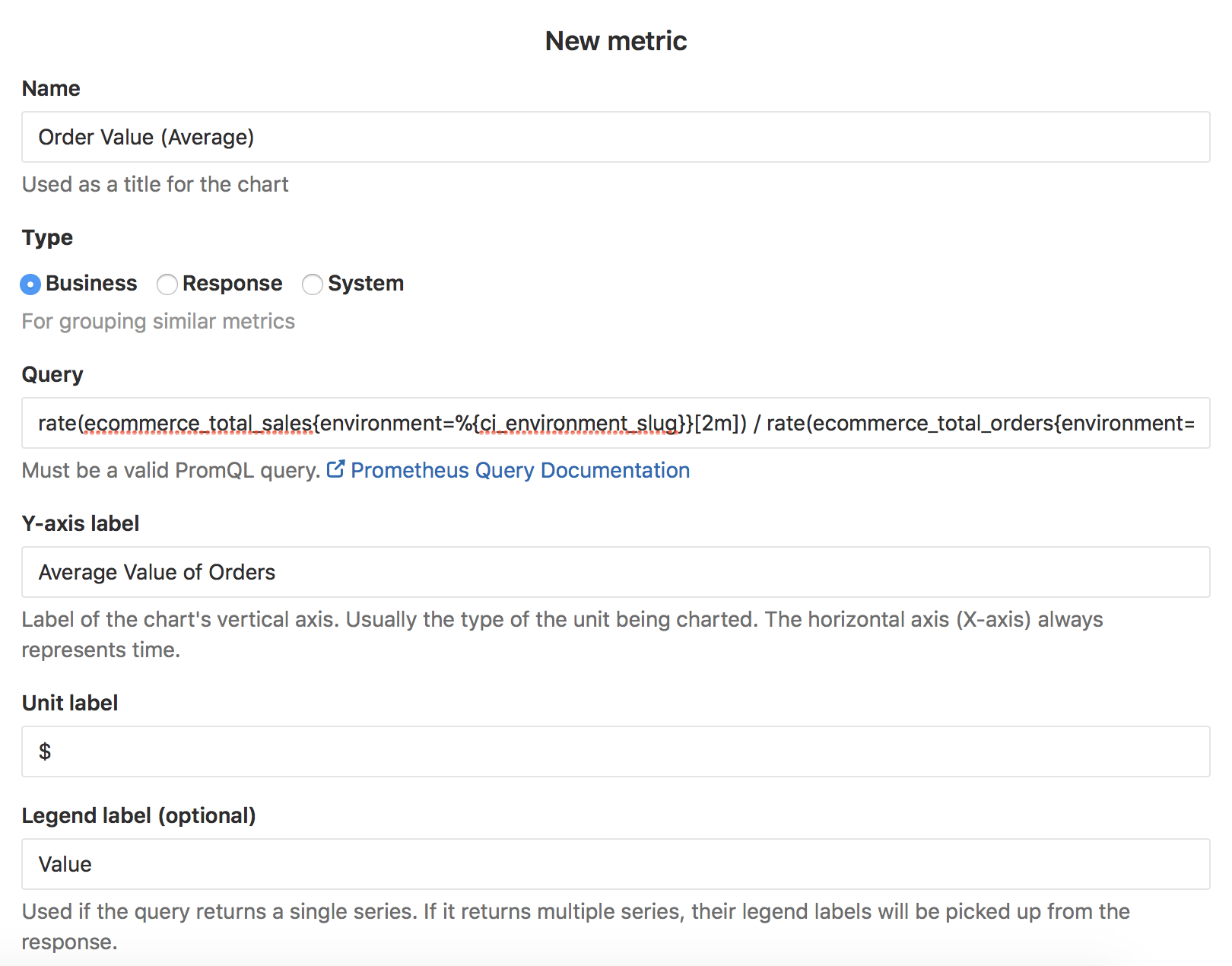

Adding additional metrics [PREMIUM]

Introduced in GitLab Premium 10.6.

Additional metrics can be monitored by adding them on the Prometheus integration page. Once saved, they will be displayed on the environment performance dashboard.

A few fields are required:

- Name: Chart title

- Type: Type of metric. Metrics of the same type will be shown together.

- Query: Valid PromQL query.

- Y-axis label: Y axis title to display on the dashboard.

- Unit label: Query units, for example req / sec. Shown next to the value.

Multiple metrics can be displayed on the same chart if the fields Name, Type, and Y-axis label match between metrics. For example, a metric with Name Requests Rate, Type Business, and Y-axis label req / sec would display on the same chart as a second metric with the same values. A Legend label is suggested if this feature is used.
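The grouping rule amounts to keying charts on three fields. The sketch below uses a hypothetical `Metric` record whose fields mirror the form (it is not GitLab's internal model); the PromQL queries and the `http_requests_total` metric name are illustrative only. The two request-rate metrics share Name, Type, and Y-axis label, so they land on one chart and are told apart by their legend labels.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """Hypothetical custom metric definition mirroring the form fields."""
    name: str
    metric_type: str
    query: str
    y_label: str
    unit: str
    legend: str = ""


ChartKey = tuple[str, str, str]  # (Name, Type, Y-axis label)


def group_into_charts(metrics: list[Metric]) -> dict[ChartKey, list[Metric]]:
    """Put metrics that share Name, Type, and Y-axis label on the same chart."""
    charts = defaultdict(list)
    for m in metrics:
        charts[(m.name, m.metric_type, m.y_label)].append(m)
    return dict(charts)


metrics = [
    Metric("Requests Rate", "Business", 'sum(rate(http_requests_total{code="200"}[5m]))',
           "req / sec", "req / sec", legend="HTTP 200"),
    Metric("Requests Rate", "Business", 'sum(rate(http_requests_total{code="500"}[5m]))',
           "req / sec", "req / sec", legend="HTTP 500"),
    Metric("Memory", "System", "sum(container_memory_usage_bytes) / 1024 / 1024", "MB", "MB"),
]
for key, members in group_into_charts(metrics).items():
    print(key, [m.legend or m.name for m in members])
```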

Query Variables

GitLab supports a limited set of CI variables in the Prometheus query. This is particularly useful for identifying a specific environment, for example with CI_ENVIRONMENT_SLUG. The supported variables are:

- CI_ENVIRONMENT_SLUG

- KUBE_NAMESPACE

To specify a variable in a query, enclose it in curly braces with a leading percent. For example: %{ci_environment_slug}.
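A minimal sketch of this substitution, assuming a regular expression that replaces each `%{name}` placeholder with the value of the matching variable, compared case-insensitively. It mimics the documented syntax but is not GitLab's implementation, and the query and label names are examples only. Leaving unknown placeholders untouched is a choice made for this sketch.

```python
import re

# Matches %{name} and captures the variable name.
PLACEHOLDER = re.compile(r"%\{(\w+)\}")


def expand_query(query: str, variables: dict[str, str]) -> str:
    """Replace %{var} placeholders with values; leave unknown ones untouched."""
    lookup = {k.lower(): v for k, v in variables.items()}

    def substitute(match: re.Match) -> str:
        return lookup.get(match.group(1).lower(), match.group(0))

    return PLACEHOLDER.sub(substitute, query)


query = ('sum(rate(http_requests_total{environment="%{ci_environment_slug}",'
         'namespace="%{kube_namespace}"}[5m]))')
print(expand_query(query, {"CI_ENVIRONMENT_SLUG": "production", "KUBE_NAMESPACE": "my-app-42"}))
# sum(rate(http_requests_total{environment="production",namespace="my-app-42"}[5m]))
```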

Setting up alerts for Prometheus metrics [ULTIMATE]

Managed Prometheus instances

Introduced in GitLab Ultimate 11.2 for custom metrics, and 11.3 for library metrics.

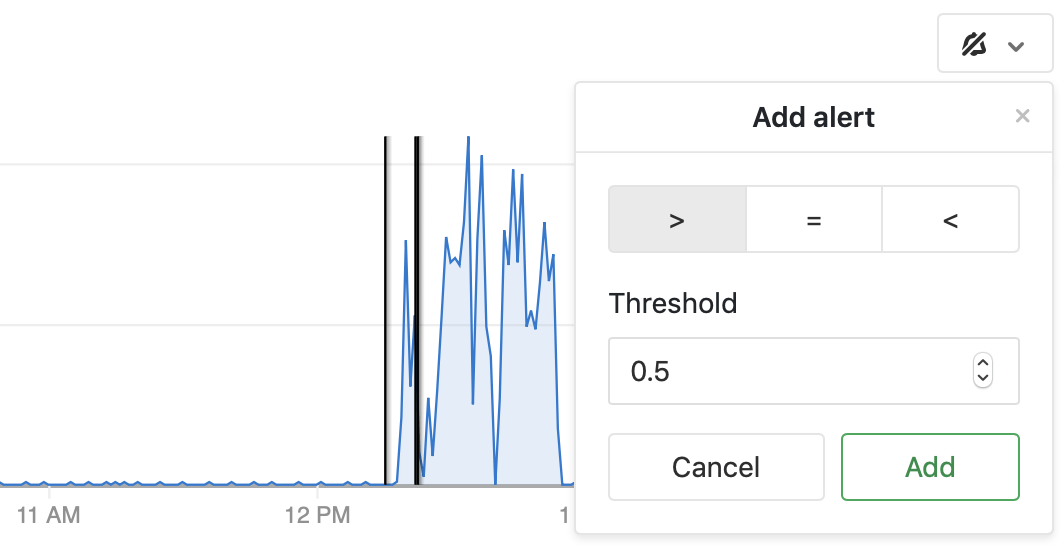

For managed Prometheus instances using auto configuration, alerts for metrics can be configured directly in the performance dashboard.

To set an alert, click on the alarm icon in the top right corner of the metric you want to create the alert for. A dropdown will appear, with options to set the threshold and operator. Click Add to save and activate the alert.

To remove the alert, click the alert icon for the desired metric again, and click Delete.
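Conceptually, an alert compares the metric's current value to the threshold using the chosen operator. The sketch below assumes the dropdown offers `>`, `<`, and `==` and maps those symbols onto Python's `operator` functions; the exact operator set and the function name `alert_fires` are assumptions for illustration.

```python
import operator

# Hypothetical mapping from dropdown symbols to comparison functions.
OPERATORS = {">": operator.gt, "<": operator.lt, "==": operator.eq}


def alert_fires(value: float, op: str, threshold: float) -> bool:
    """Return True when `value <op> threshold` holds."""
    try:
        compare = OPERATORS[op]
    except KeyError:
        raise ValueError(f"unsupported operator: {op!r}") from None
    return compare(value, threshold)


print(alert_fires(0.93, ">", 0.9))  # True: e.g. 93% usage against a 90% threshold
```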

External Prometheus instances

Introduced in GitLab Ultimate 11.8.

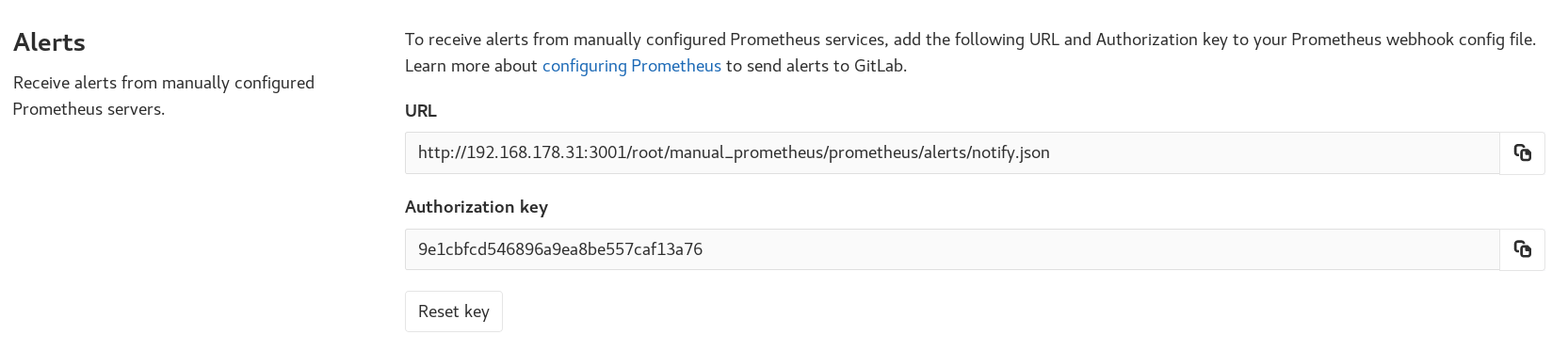

For manually configured Prometheus servers, a notify endpoint is provided to use with Prometheus webhooks. If you have manual configuration enabled, an Alerts section is added to Settings > Integrations > Prometheus. This contains the URL and Authorization Key. The Reset Key button will invalidate the key and generate a new one.

To send GitLab alert notifications, copy the URL and Authorization Key into the webhook_configs section of your Prometheus Alertmanager configuration:

```yaml
receivers:
  - name: gitlab
    webhook_configs:
      - http_config:
          bearer_token: 9e1cbfcd546896a9ea8be557caf13a76
        send_resolved: true
        url: http://192.168.178.31:3001/root/manual_prometheus/prometheus/alerts/notify.json
...
```
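To exercise the notify endpoint without waiting for a real alert, you could post a body shaped like Alertmanager's webhook payload yourself. The sketch below only builds the `urllib.request.Request`; the URL and key are the example values from the configuration above, and the alert labels and annotations are hypothetical. GitLab may expect more of the Alertmanager fields than this minimal payload includes, so treat it as a starting point. Send it with `urllib.request.urlopen(request)` from a host that can reach GitLab.

```python
import json
import urllib.request
from datetime import datetime, timezone

NOTIFY_URL = "http://192.168.178.31:3001/root/manual_prometheus/prometheus/alerts/notify.json"
AUTH_KEY = "9e1cbfcd546896a9ea8be557caf13a76"  # example value; use your own key


def build_test_alert(url: str, key: str) -> urllib.request.Request:
    """Build a POST carrying one firing alert in Alertmanager's webhook format."""
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    payload = {
        "version": "4",
        "status": "firing",
        "receiver": "gitlab",
        "alerts": [
            {
                "status": "firing",
                "labels": {"alertname": "TestAlert"},  # hypothetical alert
                "annotations": {"summary": "Manual test of the notify endpoint"},
                "startsAt": now,
            }
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Same bearer token Alertmanager sends via http_config.bearer_token.
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_test_alert(NOTIFY_URL, AUTH_KEY)
print(request.get_method(), request.full_url)
```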

Taking action on incidents [ULTIMATE]

Introduced in GitLab Ultimate 11.11.

Alerts can be used to trigger actions, such as opening an issue automatically. To configure the actions:

- Navigate to your project's Settings > Operations > Incidents.

- Enable the option to create issues.

- Choose the issue template to create the issue from.

- Optionally, select whether to send an email notification to the developers of the project.

- Click Save changes.

Once enabled, an issue will be opened automatically when an alert is triggered. The author of the issue will be the GitLab Alert Bot. To further customize the issue, you can add labels, mentions, or any other supported quick action in the selected issue template.

If the metric exceeds the threshold of the alert for over 5 minutes, an email will be sent to all Maintainers and Owners of the project.
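The "over 5 minutes" condition can be sketched as a check that the value has stayed above the threshold for the whole trailing period up to the latest sample. This assumes chronologically ordered `(timestamp, value)` samples and a simple `>` comparison; it illustrates the rule as described, not GitLab's or Prometheus's evaluation logic (Prometheus alerting rules express a similar idea with a `for:` duration).

```python
def exceeded_for(samples: list[tuple[float, float]], threshold: float,
                 duration: float = 5 * 60) -> bool:
    """Return True if the value stayed above `threshold` for at least `duration` seconds.

    `samples` are (unix_timestamp, value) pairs in chronological order.
    The breach must still be ongoing at the latest sample.
    """
    if not samples:
        return False
    latest = samples[-1][0]
    breach_start = None
    # Walk backwards while the threshold is exceeded to find when the breach began.
    for ts, value in reversed(samples):
        if value > threshold:
            breach_start = ts
        else:
            break
    return breach_start is not None and latest - breach_start >= duration


steady = [(t, 95.0) for t in range(0, 301, 60)]  # above 90 for 5 minutes
print(exceeded_for(steady, threshold=90.0))  # True
```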

Determining the performance impact of a merge

Introduced in GitLab 9.2. GitLab 9.3 added the numeric comparison of the 30-minute averages. Requires Kubernetes metrics.

Developers can view the performance impact of their changes within the merge request workflow. When a source branch has been deployed to an environment, a sparkline and a numeric comparison of the average memory consumption will appear. On the sparkline, a dot indicates when the current changes were deployed, with up to 30 minutes of performance data displayed before and after. The comparison shows the difference between the 30-minute averages before and after the deployment. This information is updated after each commit has been deployed.
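The numeric comparison can be sketched as the difference between two 30-minute window averages around the deployment time. The sample format, the function name `average_change`, and the half-open window boundaries are choices made for this sketch, not details taken from GitLab.

```python
from statistics import mean

WINDOW = 30 * 60  # 30 minutes, in seconds


def average_change(samples: list[tuple[float, float]], deployed_at: float) -> float:
    """Return avg(after deploy) - avg(before deploy) over 30-minute windows.

    `samples` are (unix_timestamp, value) pairs, e.g. memory usage in MB.
    Before window: [deployed_at - 30m, deployed_at); after: [deployed_at, deployed_at + 30m).
    """
    before = [v for t, v in samples if deployed_at - WINDOW <= t < deployed_at]
    after = [v for t, v in samples if deployed_at <= t < deployed_at + WINDOW]
    if not before or not after:
        raise ValueError("need samples on both sides of the deployment")
    return mean(after) - mean(before)


# Memory sampled every 5 minutes; deployment at t = 3600 s.
samples = ([(t, 200.0) for t in range(1800, 3600, 300)]
           + [(t, 230.0) for t in range(3600, 5400, 300)])
print(average_change(samples, deployed_at=3600))  # 30.0
```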

Once merged and the target branch has been redeployed, the metrics will switch to show the new environments this revision has been deployed to.

Performance data will be available for the duration it is persisted on the Prometheus server.

Troubleshooting

If the "No data found" screen continues to appear, it could be due to:

- No successful deployments have occurred to this environment.

- Prometheus does not have performance data for this environment, or the metrics are not labeled correctly. To test this, connect to the Prometheus server and run a query, replacing `$CI_ENVIRONMENT_SLUG` with the name of your environment.
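When running the test query by hand, Python's `string.Template` can fill in `$CI_ENVIRONMENT_SLUG`, since it uses the same `$name` syntax. The query below is a hypothetical stand-in built on the common cAdvisor metric `container_memory_usage_bytes` and an `environment` label; substitute the query that matches your setup. An empty result for your environment suggests missing data or missing labels.

```python
from string import Template

# Hypothetical test query; replace with the query appropriate for your setup.
TEST_QUERY = Template('container_memory_usage_bytes{environment="$CI_ENVIRONMENT_SLUG"}')


def build_test_query(environment_slug: str) -> str:
    """Fill in the environment slug, as the troubleshooting step describes."""
    return TEST_QUERY.substitute(CI_ENVIRONMENT_SLUG=environment_slug)


print(build_test_query("production"))
# container_memory_usage_bytes{environment="production"}
```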