
| stage | group | info | disqus_identifier | type |
|---|---|---|---|---|
| Verify | Continuous Integration | To determine the technical writer assigned to the Stage/Group associated with this page, see https://about.gitlab.com/handbook/engineering/ux/technical-writing/#designated-technical-writers | https://docs.gitlab.com/ee/ci/pipelines.html | reference |

CI/CD pipelines

Introduced in GitLab 8.8.

TIP: Tip: Watch the "Mastering continuous software development" webcast to see a comprehensive demo of a GitLab CI/CD pipeline.

Pipelines are the top-level component of continuous integration, delivery, and deployment.

Pipelines comprise:

- Jobs, which define what to do. For example, jobs that compile or test code.

- Stages, which define when to run the jobs. For example, stages that run tests after stages that compile the code.

Jobs are executed by runners. Multiple jobs in the same stage are executed in parallel, if there are enough concurrent runners.

If all jobs in a stage succeed, the pipeline moves on to the next stage.

If any job in a stage fails, the next stage is not (usually) executed and the pipeline ends early.

In general, pipelines are executed automatically and require no intervention once created. However, there are also times when you can manually interact with a pipeline.

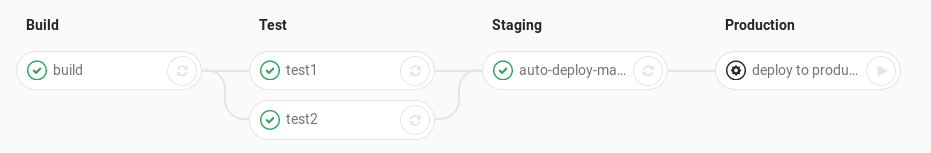

A typical pipeline might consist of four stages, executed in the following order:

- A build stage, with a job called compile.

- A test stage, with two jobs called test1 and test2.

- A staging stage, with a job called deploy-to-stage.

- A production stage, with a job called deploy-to-prod.
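
As a rough illustration, the four-stage pipeline described above could be written in .gitlab-ci.yml along the following lines. The stage and job names come from the list above; the echo commands are placeholders chosen for this sketch and stand in for real build, test, and deploy commands.

```yaml
# Sketch only: the echo commands are placeholders, not real build or deploy steps.
stages:
  - build
  - test
  - staging
  - production

compile:
  stage: build
  script:
    - echo "Compiling the code"

# test1 and test2 share a stage, so they can run in parallel
# if enough runners are available.
test1:
  stage: test
  script:
    - echo "Running test suite 1"

test2:
  stage: test
  script:
    - echo "Running test suite 2"

deploy-to-stage:
  stage: staging
  script:
    - echo "Deploying to staging"

deploy-to-prod:
  stage: production
  script:
    - echo "Deploying to production"
```

Stages run in the order listed under stages, so a failure in test1 or test2 would normally stop the pipeline before the staging stage.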

NOTE: Note: If you have a mirrored repository that GitLab pulls from, you may need to enable pipeline triggering in your project's Settings > Repository > Pull from a remote repository > Trigger pipelines for mirror updates.

Types of pipelines

Pipelines can be configured in many different ways:

- Basic pipelines run everything in each stage concurrently, followed by the next stage.

- Directed Acyclic Graph Pipeline (DAG) pipelines are based on relationships between jobs and can run more quickly than basic pipelines.

- Multi-project pipelines combine pipelines for different projects together.

- Parent-Child pipelines break down complex pipelines into one parent pipeline that can trigger multiple child sub-pipelines, which all run in the same project and with the same SHA.

- Pipelines for Merge Requests run for merge requests only (rather than for every commit).

- Pipelines for Merged Results are merge request pipelines that act as though the changes from the source branch have already been merged into the target branch.

- Merge Trains use pipelines for merged results to queue merges one after the other.
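
To make the difference between basic and DAG pipelines more concrete, the sketch below uses the needs keyword so that each test job can start as soon as its own build job finishes, instead of waiting for the whole build stage. All job names and commands are hypothetical and chosen only for illustration.

```yaml
# Sketch only: job names and echo commands are hypothetical.
stages:
  - build
  - test

build_a:
  stage: build
  script:
    - echo "Building component A"

build_b:
  stage: build
  script:
    - echo "Building component B"

test_a:
  stage: test
  needs: ["build_a"]   # can start once build_a succeeds, even if build_b is still running
  script:
    - echo "Testing component A"

test_b:
  stage: test
  needs: ["build_b"]   # can start once build_b succeeds
  script:
    - echo "Testing component B"
```

Without the needs entries, this would behave as a basic pipeline: both test jobs would wait for every job in the build stage.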

Configure a pipeline

Pipelines and their component jobs and stages are defined in the CI/CD pipeline configuration file for each project.

- Jobs are the basic configuration component.

- Stages are defined by using the stages keyword.

For a list of configuration options in the CI pipeline file, see the GitLab CI/CD Pipeline Configuration Reference.

You can also configure specific aspects of your pipelines through the GitLab UI. For example:

- Pipeline settings for each project.

- Pipeline schedules.

- Custom CI/CD variables.

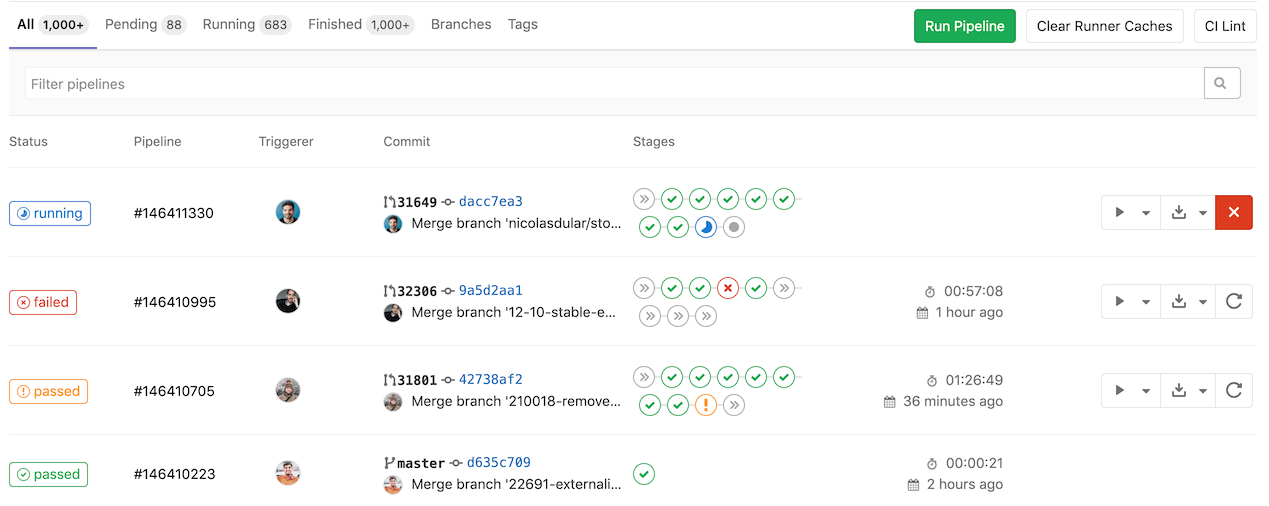

View pipelines

You can find the current and historical pipeline runs under your project's CI/CD > Pipelines page. You can also access pipelines for a merge request by navigating to its Pipelines tab.

Click a pipeline to open the Pipeline Details page and show the jobs that were run for that pipeline. From here you can cancel a running pipeline, retry jobs on a failed pipeline, or delete a pipeline.

Starting in GitLab 12.3, a link to the latest pipeline for the last commit of a given branch is available at /project/pipelines/[branch]/latest. Also, /project/pipelines/latest redirects you to the latest pipeline for the last commit on the project's default branch.

Starting in GitLab 13.0, you can filter the pipeline list by:

- Trigger author

- Branch name

- Status (GitLab 13.1 and later)

- Tag (GitLab 13.1 and later)

Run a pipeline manually

Pipelines can be manually executed, with predefined or manually specified variables.

You might do this if the results of a pipeline (for example, a code build) are required outside the normal operation of the pipeline.

To execute a pipeline manually:

- Navigate to your project's CI/CD > Pipelines.

- Select the Run Pipeline button.

- On the Run Pipeline page:

  - Select the branch to run the pipeline for in the Create for field.

  - Enter any environment variables required for the pipeline run.

  - Click the Create pipeline button.

The pipeline now executes the jobs as configured.

Run a pipeline by using a URL query string

Introduced in GitLab 12.5.

You can use a query string to pre-populate the Run Pipeline page. For example, the query string .../pipelines/new?ref=my_branch&var[foo]=bar&file_var[file_foo]=file_bar pre-populates the Run Pipeline page with:

- Run for field: my_branch.

- Variables section:

  - Variable:

    - Key: foo

    - Value: bar

  - File:

    - Key: file_foo

    - Value: file_bar

The format of the pipelines/new URL is:

.../pipelines/new?ref=<branch>&var[<variable_key>]=<value>&file_var[<file_key>]=<value>

The following parameters are supported:

- ref: specify the branch to populate the Run for field with.

- var: specify a Variable variable.

- file_var: specify a File variable.

For each var or file_var, a key and value are required.

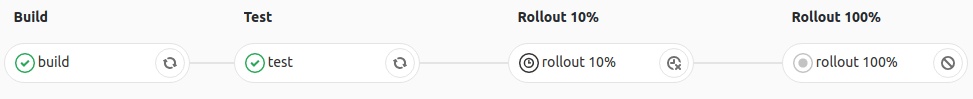

Add manual interaction to your pipeline

Introduced in GitLab 8.15.

Manual actions, configured using the when:manual parameter, allow you to require manual interaction before moving forward in the pipeline.

You can do this straight from the pipeline graph. Just click the play button to execute that particular job.

For example, your pipeline might start automatically, but it requires manual action to deploy to production. In the example below, the production stage has a job with a manual action.
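
A minimal configuration sketch of such a manual action might look like the following. The job names and echo commands are placeholders assumed for this example; only the when: manual setting is the point of the illustration.

```yaml
# Sketch only: job names and echo commands are placeholders.
stages:
  - build
  - production

compile:
  stage: build
  script:
    - echo "Compiling the code"

deploy-to-prod:
  stage: production
  script:
    - echo "Deploying to production"
  when: manual   # the job does not start until someone clicks its play button
```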

Start multiple manual actions in a stage

Introduced in GitLab 11.11.

Multiple manual actions in a single stage can be started at the same time using the "Play all manual" button. Once you click this button, each individual manual action is triggered and refreshed to an updated status.

This functionality is only available:

- For users with at least Developer access.

- If the stage contains manual actions.

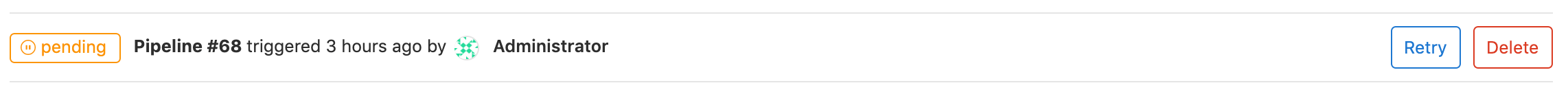

Delete a pipeline

Introduced in GitLab 12.7.

Users with owner permissions in a project can delete a pipeline. On the CI/CD > Pipelines page, click the pipeline to open its Pipeline Details page, then use the Delete button.

CAUTION: Warning: Deleting a pipeline expires all pipeline caches, and deletes all related objects, such as builds, logs, artifacts, and triggers. This action cannot be undone.

Pipeline quotas

Each user has a personal pipeline quota that tracks the usage of shared runners in all personal projects. Each group has a usage quota that tracks the usage of shared runners for all projects created within the group.

When a pipeline is triggered, regardless of who triggered it, the pipeline quota for the project owner's namespace is used. In this case, the namespace can be the user or group that owns the project.

How pipeline duration is calculated

Total running time for a given pipeline excludes retries and pending (queued) time.

Each job is represented as a Period, which consists of:

- Period#first (when the job started).

- Period#last (when the job finished).

A simple example is:

- A (1, 3)

- B (2, 4)

- C (6, 7)

In the example:

- A begins at 1 and ends at 3.

- B begins at 2 and ends at 4.

- C begins at 6 and ends at 7.

Visually, it can be viewed as:

0  1  2  3  4  5  6  7
   AAAAAAA
      BBBBBBB
                  CCCC

The union of A, B, and C is (1, 4) and (6, 7). Therefore, the total running time is:

(4 - 1) + (7 - 6) => 4

Pipeline security on protected branches

A strict security model is enforced when pipelines are executed on protected branches.

The following actions are allowed on protected branches only if the user is allowed to merge or push on that specific branch:

- Run manual pipelines (using the Web UI or pipelines API).

- Run scheduled pipelines.

- Run pipelines using triggers.

- Run on-demand DAST scan.

- Trigger manual actions on existing pipelines.

- Retry or cancel existing jobs (using the Web UI or pipelines API).

Variables marked as protected are accessible only to jobs that run on protected branches, preventing untrusted users getting unintended access to sensitive information like deployment credentials and tokens.

Runners marked as protected can run jobs only on protected branches, preventing untrusted code from executing on the protected runner and preserving deployment keys and other credentials from being unintentionally accessed. In order to ensure that jobs intended to be executed on protected runners do not use regular runners, they must be tagged accordingly.
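
For illustration, a job can be steered to a protected runner with the tags keyword, roughly as sketched below. The tag name protected-deploy is hypothetical: it would need to match a tag assigned to the protected runner in your own setup.

```yaml
# Sketch only: "protected-deploy" is a hypothetical tag name and the
# echo command is a placeholder.
stages:
  - production

deploy-to-prod:
  stage: production
  tags:
    - protected-deploy   # only runners that carry this tag pick up the job
  script:
    - echo "Deploying with protected credentials"
```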

View jobs in a pipeline

When you access a pipeline, you can see the related jobs for that pipeline.

Clicking an individual job shows you its job log, and allows you to:

- Cancel the job.

- Retry the job.

- Erase the job log.

See why a job failed

Introduced in GitLab 10.7.

When a pipeline fails or is allowed to fail, there are several places where you can find the reason:

- In the pipeline graph, on the pipeline detail view.

- In the pipeline widgets, in the merge requests and commit pages.

- In the job views, in the global and detailed views of a job.

In each place, if you hover over the failed job you can see the reason it failed.

In GitLab 10.8 and later, you can also see the reason it failed on the Job detail page.

The order of jobs in a pipeline

The order of jobs in a pipeline depends on the type of pipeline graph.

- For regular pipeline graphs, jobs are sorted by name.

- For pipeline mini graphs, jobs are sorted by severity and then by name.

The order of severity is:

- failed

- warning

- pending

- running

- manual

- scheduled

- canceled

- success

- skipped

- created

For example:

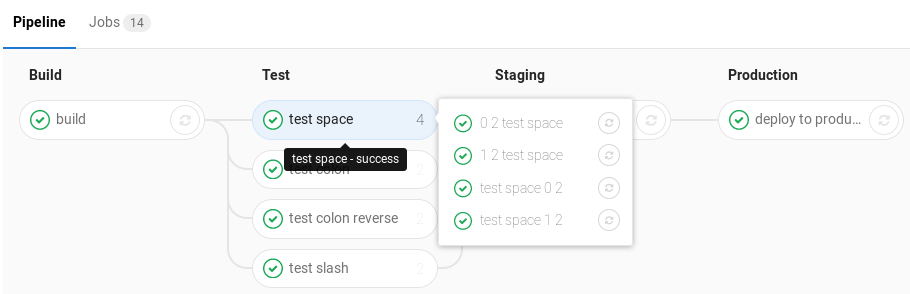

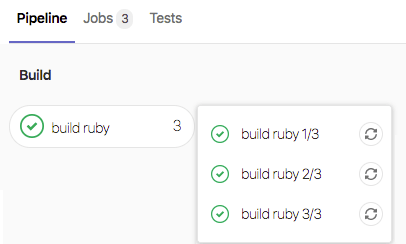

Group jobs in a pipeline

Introduced in GitLab 8.12.

If you have many similar jobs, your pipeline graph becomes long and hard to read.

You can automatically group similar jobs together. If the job names are formatted in a certain way, they are collapsed into a single group in regular pipeline graphs (not the mini graphs).

You can recognize when a pipeline has grouped jobs if you don't see the retry or cancel button inside them. Hovering over them shows the number of grouped jobs. Click to expand them.

To create a group of jobs, in the CI/CD pipeline configuration file, separate each job name with a number and one of the following:

- A slash (/), for example, test 1/3, test 2/3, test 3/3.

- A colon (:), for example, test 1:3, test 2:3, test 3:3.

- A space, for example, test 0 3, test 1 3, test 2 3.

You can use these symbols interchangeably.

In the example below, these three jobs are in a group named build ruby:

build ruby 1/3:
  stage: build
  script:
    - echo "ruby1"

build ruby 2/3:
  stage: build
  script:
    - echo "ruby2"

build ruby 3/3:
  stage: build
  script:
    - echo "ruby3"

In the pipeline, the result is a group named build ruby with three jobs:

The jobs are ordered by comparing the numbers from left to right. You usually want the first number to be the index and the second number to be the total.

This regular expression evaluates the job names: \d+[\s:\/\\]+\d+\s*.

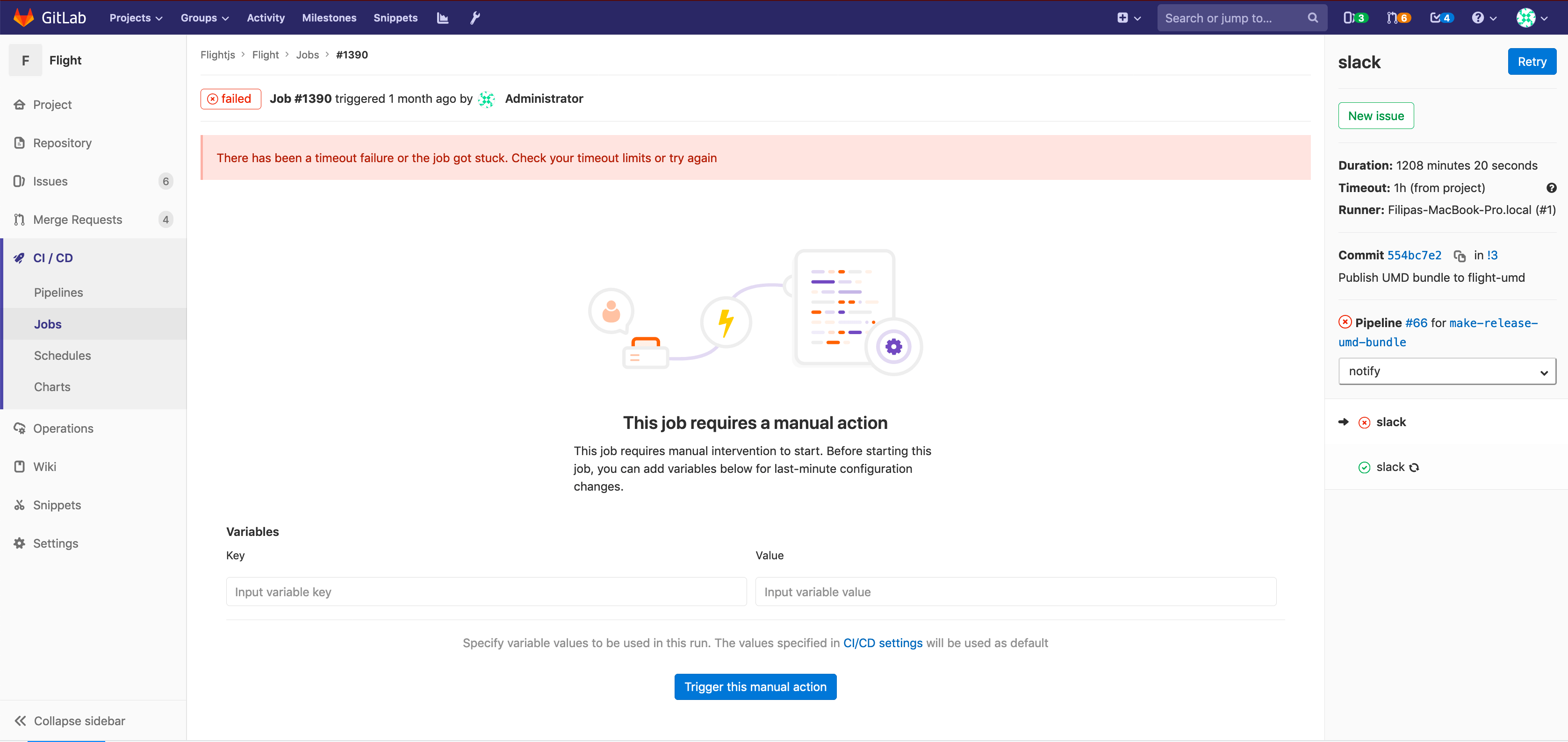

Specifying variables when running manual jobs

Introduced in GitLab 12.2.

When running manual jobs, you can supply additional job-specific variables.

You can do this from the job page of the manual job you want to run with additional variables. To access this page, click on the name of the manual job in the pipeline view, not the play ({play}) button.

This is useful when you want to alter the execution of a job that uses custom environment variables.

Add a variable name (key) and value here to override the value defined in the UI or .gitlab-ci.yml, for a single run of the manual job.
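
As a sketch of a job that benefits from this, the manual job below reads a variable that has a default value in .gitlab-ci.yml. The job name and the DEPLOY_TARGET variable are hypothetical; supplying a different DEPLOY_TARGET on the job page would be expected to replace the default for that one run.

```yaml
# Sketch only: the job name and DEPLOY_TARGET are hypothetical.
deploy:
  variables:
    DEPLOY_TARGET: "staging"   # default value, used unless overridden on the job page
  script:
    - echo "Deploying to $DEPLOY_TARGET"
  when: manual
```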

Delay a job

Introduced in GitLab 11.4.

When you do not want to run a job immediately, you can use the when:delayed parameter to delay a job's execution for a certain period.

This is especially useful for timed incremental rollout where new code is rolled out gradually.

For example, if you start rolling out new code and:

- Users do not experience trouble, GitLab can automatically complete the deployment from 0% to 100%.

- Users experience trouble with the new code, you can stop the timed incremental rollout by canceling the pipeline and rolling back to the last stable version.
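
A delayed job might be configured roughly as follows. The job name, the 30-minute delay, and the echo command are assumptions made for this sketch; start_in is the keyword that sets the delay for a when: delayed job.

```yaml
# Sketch only: the job name, delay, and echo command are placeholders.
timed-rollout:
  script:
    - echo "Rolling out to the next batch of users"
  when: delayed
  start_in: 30 minutes   # how long the job waits before it runs
```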

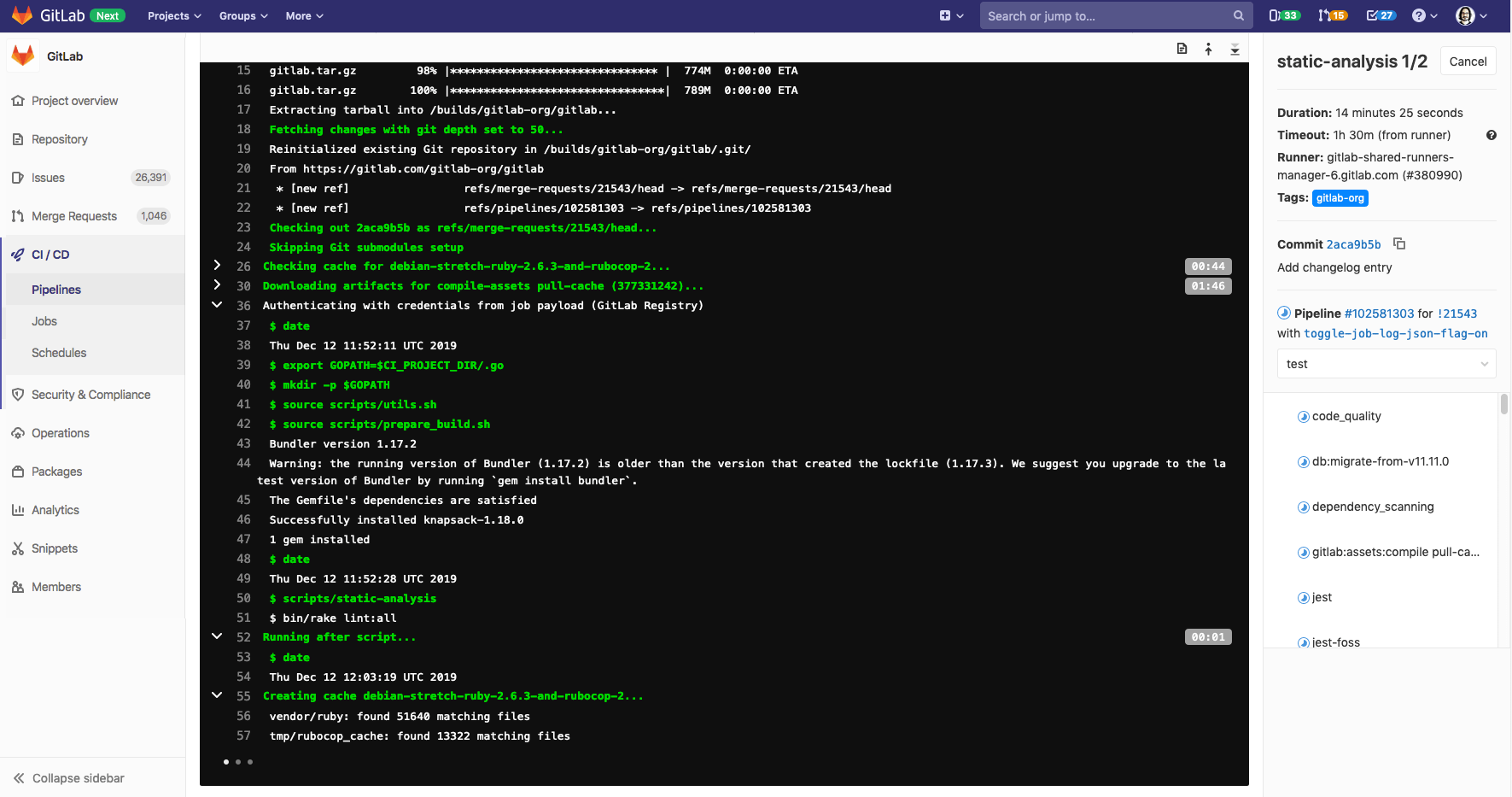

Expand and collapse job log sections

Introduced in GitLab 12.0.

Job logs are divided into sections that can be collapsed or expanded. Each section displays the duration.

In the following example:

- Two sections are collapsed and can be expanded.

- Three sections are expanded and can be collapsed.

Custom collapsible sections

Introduced in GitLab 12.0.

You can create collapsible sections in job logs by manually outputting special codes that GitLab uses to determine what sections to collapse:

- Section start marker: section_start:UNIX_TIMESTAMP:SECTION_NAME\r\e[0K + TEXT_OF_SECTION_HEADER

- Section end marker: section_end:UNIX_TIMESTAMP:SECTION_NAME\r\e[0K

You must add these codes to the script section of the CI configuration. For example, using echo:

job1:
  script:
    - echo -e "section_start:`date +%s`:my_first_section\r\e[0KHeader of the 1st collapsible section"
    - echo 'this line should be hidden when collapsed'
    - echo -e "section_end:`date +%s`:my_first_section\r\e[0K"

In the example above:

- date +%s: The Unix timestamp (for example 1560896352).

- my_first_section: The name given to the section.

- \r\e[0K: Prevents the section markers from displaying in the rendered (colored) job log, but they are displayed in the raw job log. To see them, in the top right of the job log, click {doc-text} (Show complete raw).

  - \r: carriage return.

  - \e[0K: clear line ANSI escape code.

Sample raw job log:

section_start:1560896352:my_first_section\r\e[0KHeader of the 1st collapsible section
this line should be hidden when collapsed
section_end:1560896353:my_first_section\r\e[0K

Pre-collapse sections

Introduced in GitLab 13.5.

You can make the job log automatically collapse collapsible sections by adding the collapsed option to the section start.

Add [collapsed=true] after the section name and before the \r. The section end marker remains unchanged:

- Section start marker with [collapsed=true]: section_start:UNIX_TIMESTAMP:SECTION_NAME[collapsed=true]\r\e[0K + TEXT_OF_SECTION_HEADER

- Section end marker: section_end:UNIX_TIMESTAMP:SECTION_NAME\r\e[0K

Add the updated section start text to the CI configuration. For example, using echo:

job1:
  script:
    - echo -e "section_start:`date +%s`:my_first_section[collapsed=true]\r\e[0KHeader of the 1st collapsible section"
    - echo 'this line should be hidden automatically after loading the job log'
    - echo -e "section_end:`date +%s`:my_first_section\r\e[0K"

Visualize pipelines

Introduced in GitLab 8.11.

Pipelines can be complex structures with many sequential and parallel jobs.

To make it easier to understand the flow of a pipeline, GitLab has pipeline graphs for viewing pipelines and their statuses.

Pipeline graphs can be displayed in two different ways, depending on the page you access the graph from.

GitLab capitalizes the stages' names in the pipeline graphs.

Regular pipeline graphs

Regular pipeline graphs show the names of the jobs in each stage. Regular pipeline graphs can be found when you are on a single pipeline page. For example:

Multi-project pipeline graphs help you visualize the entire pipeline, including all cross-project inter-dependencies. (PREMIUM)

Pipeline mini graphs

Pipeline mini graphs take less space and can tell you at a quick glance if all jobs passed or something failed. The pipeline mini graph can be found when you navigate to:

- The pipelines index page.

- A single commit page.

- A merge request page.

Pipeline mini graphs allow you to see all related jobs for a single commit and the net result of each stage of your pipeline. This allows you to quickly see what failed and fix it.

Stages in pipeline mini graphs are collapsible. Hover your mouse over them and click to expand their jobs.

| Mini graph | Mini graph expanded |
|---|---|
| | |

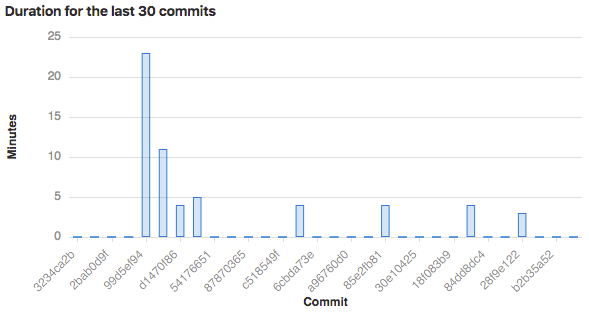

Pipeline success and duration charts

- Introduced in GitLab 3.1.1 as Commit Stats, and later renamed to Pipeline Charts.

- Renamed to CI / CD Analytics in GitLab 12.8.

GitLab tracks the history of your pipeline successes and failures, as well as how long each pipeline ran. To view this information, go to Analytics > CI / CD Analytics.

View successful pipelines:

View pipeline duration history:

Pipeline badges

Pipeline status and test coverage report badges are available and configurable for each project. For information on adding pipeline badges to projects, see Pipeline badges.

Pipelines API

GitLab provides API endpoints to:

- Perform basic functions. For more information, see Pipelines API.

- Maintain pipeline schedules. For more information, see Pipeline schedules API.

- Trigger pipeline runs. For more information, see: